Lecture 03: Unix Design Philosophy and Stream Redirection

1 Review: Pipelines

Last week, we looked at a number of really useful file processing tools for the Unix command line. We will now continue that discussion by focusing on command line tools that read and write to the terminal.

Let's start with two such command line tools, cat and head. Recall that we use head like so:

#> head -3 sample-db.csv
#first_name,last_name,company_name,address,city,county,state,zip,phone1,phone2,email,web
James,Butt,Benton, John B Jr,6649 N Blue Gum St,New Orleans,Orleans,LA,70116,504-621-8927,504-845-1427,jbutt@gmail.com,http://www.bentonjohnbjr.com
Josephine,Darakjy,Chanay, Jeffrey A Esq,4 B Blue Ridge Blvd,Brighton,Livingston,MI,48116,810-292-9388,810-374-9840,josephine_darakjy@darakjy.org,http://www.chanayjeffreyaesq.com

head takes an option for the number of lines to print from the head of the file, as well as an argument, the filename. As a well-defined Unix command, it can also take input through a pipeline.

#> cat sample-db.csv | head -3
(...)

Recall that the cat command just prints the contents of the file to the terminal. In the above pipeline, those contents are piped as input to head with the 3-line option. Thus, the above pipeline performs the same task as providing the filename directly to head.
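One way to check this equivalence is to capture the output of both forms and compare them. The sketch below builds its own small file instead of assuming sample-db.csv is present; the file name data.txt and its contents are made up for illustration.

```shell
# Build a small throwaway file; the name and contents are hypothetical.
tmp=$(mktemp -d)
printf '%s\n' one two three four five > "$tmp/data.txt"

# Form 1: head reads the file named as its argument.
direct=$(head -3 "$tmp/data.txt")

# Form 2: cat writes the file into the pipeline and head reads from it.
piped=$(cat "$tmp/data.txt" | head -3)

if [ "$direct" = "$piped" ]; then
    echo "same output"
else
    echo "different output"
fi

rm -rf "$tmp"
```

Both forms should report the same three lines, since head does not care whether its input arrives from a named file or from the pipeline.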

Like head, cat can also read input from the pipeline and will print it back to the terminal. Consider the following example:

#> head -3 sample-db.csv | cat
(...)

head writes the first three lines of the database file to its output, which cat reads as input and then prints to the screen. Recall that cat also concatenates, so we can provide arguments to cat to concatenate its input read from the pipeline with another input, say one read from a file.

#> head -3 sample-db.csv | cat BeatArmy.txt - GoNavy.txt
Go Navy!
#first_name,last_name,company_name,address,city,county,state,zip,phone1,phone2,email,web
James,Butt,Benton, John B Jr,6649 N Blue Gum St,New Orleans,Orleans,LA,70116,504-621-8927,504-845-1427,jbutt@gmail.com,http://www.bentonjohnbjr.com
Josephine,Darakjy,Chanay, Jeffrey A Esq,4 B Blue Ridge Blvd,Brighton,Livingston,MI,48116,810-292-9388,810-374-9840,josephine_darakjy@darakjy.org,http://www.chanayjeffreyaesq.com
Beat Army!

The - symbol among the arguments for cat indicates where to use the input read from the pipeline, and the total pipeline first takes the 3-line head from the database and concatenates that between the contents of the files BeatArmy.txt and GoNavy.txt. Of course, we could take this pipeline to the extreme:

#> cat sample-db.csv | cat | cat | cat | head -3 | cat BeatArmy.txt - GoNavy.txt
Go Navy!
#first_name,last_name,company_name,address,city,county,state,zip,phone1,phone2,email,web
James,Butt,Benton, John B Jr,6649 N Blue Gum St,New Orleans,Orleans,LA,70116,504-621-8927,504-845-1427,jbutt@gmail.com,http://www.bentonjohnbjr.com
Josephine,Darakjy,Chanay, Jeffrey A Esq,4 B Blue Ridge Blvd,Brighton,Livingston,MI,48116,810-292-9388,810-374-9840,josephine_darakjy@darakjy.org,http://www.chanayjeffreyaesq.com
Beat Army!

Since each cat command reads its input and writes it back out unchanged, we can string any number of them together without affecting the data until we get to the head command and the last cat. The point of this exercise is to show how the input and output of simple commands can be combined to form more complex Unix commands. This is a guiding philosophy of Unix.
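The two observations above, that extra cat stages change nothing and that - marks where piped input lands among cat's arguments, can be tried on small throwaway files. This is only a sketch: top.txt, bottom.txt, and data.txt are hypothetical names created just for the example.

```shell
# Throwaway files; all names and contents here are hypothetical.
tmp=$(mktemp -d)
printf 'header\n' > "$tmp/top.txt"
printf 'footer\n' > "$tmp/bottom.txt"
printf '%s\n' one two three four five > "$tmp/data.txt"

# Extra cat stages pass their input through unchanged.
short=$(head -2 "$tmp/data.txt")
long=$(cat "$tmp/data.txt" | cat | cat | head -2)

# The - argument marks where the piped input goes among the named files.
sandwich=$(head -2 "$tmp/data.txt" | cat "$tmp/top.txt" - "$tmp/bottom.txt")
printf '%s\n' "$sandwich"

rm -rf "$tmp"
```

Moving the - to a different position among cat's arguments moves the piped lines to the matching position in the output.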

2 Unix Design Philosophy

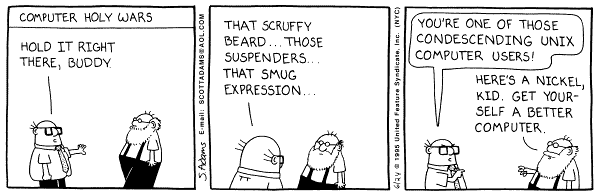

The Unix Design Philosophy is best exemplified through a quote by Doug McIlroy, a key contributor to early Unix systems:

This is the Unix philosophy: Write programs that do one thing and do it well. Write programs to work together. Write programs to handle text streams, because that is a universal interface.

All the Unix command line tools we've looked at so far meet this philosophy, and the tools we will program in the class will as well.

2.1 Write programs that do one thing and do it well

If we look at the command line tools for processing files, we see that there is a separate tool for each task. For example, we do not have a tool called headortail that can take either the first or last lines of a file. We have separate tools for each of the tasks.

While this might seem like extra work, it actually enables users to be more precise about what they are doing, as well as more expressive. It also improves the readability and comprehension of commands; the user doesn't have to read lots of command line arguments to figure out what's going on.

2.2 Write programs that work well together

The command line tools we have looked at also interoperate really well because they complement each other. For example, consider some of the pipelines you wrote in lab: you can use cut to get a field from structured data, then use grep to isolate some set of those fields, and finally use wc to count how many fields remain.
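A minimal sketch of such a cut, grep, wc pipeline follows. The records are invented for the example (name, city, state), and people.csv is a hypothetical file name, not one of the lab files.

```shell
tmp=$(mktemp -d)
# Made-up records in the form name,city,state.
printf '%s\n' \
    'Ann,Annapolis,MD' \
    'Bob,Baltimore,MD' \
    'Cy,Norfolk,VA' \
    'Di,Bowie,MD' > "$tmp/people.csv"

# cut extracts field 3 (the state), grep keeps only the MD lines,
# and wc -l counts how many lines are left.
count=$(cut -d ',' -f 3 "$tmp/people.csv" | grep 'MD' | wc -l)
echo "records in MD:" $count

rm -rf "$tmp"
```

Each tool does one small job, and none of them needs to know what the others are doing; they only agree on passing lines of text along.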

2.3 Write programs to handle text streams

Finally, the ability to handle text streams is the basis of the pipeline and what enables small and simple Unix commands to be "glued" together to form more complex and interesting Unix operations.

This philosophy leads to the development of well-formed Unix command line tools that have three properties:

- They can take input from the terminal through a pipeline or by reading an input file provided as an argument.

- They write all their output back to the terminal such that it can be read as input by another command.

- They do not write error information to standard output in a way that can interfere with a pipeline.

This process of taking input from the terminal and writing output to the terminal is the notion of handling text streams through the pipeline. In this lecture, we will look at this process in more detail.

3 Standard Input, Standard Output, and Standard Error

Every Unix program is provided with three standard file streams, or standard file descriptors, to read input from and write output to.

- Standard Input (stdin file stream, file descriptor 0): The primary input stream for reading information typed at the terminal.

- Standard Output (stdout file stream, file descriptor 1): The primary output stream for printing information and program output to the terminal.

- Standard Error (stderr file stream, file descriptor 2): The primary error stream for printing information to the terminal that resulted from an error in processing or should not be considered part of the program output.
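To make the three streams concrete, here is a rough sketch of a small filter written as a shell function. The function name shout is invented for this example. It reads from a file argument or, if none is given, from stdin; it writes its result to stdout; and it reports problems on stderr. The >&2 notation, which these notes do not otherwise cover, sends a command's output to file descriptor 2.

```shell
# shout is a hypothetical filter: it upper-cases its input.
# Input comes from the file named in $1, or from stdin when no file is given.
shout() {
    if [ "$#" -gt 0 ] && [ ! -r "$1" ]; then
        # >&2 sends this message to file descriptor 2 (stderr).
        echo "shout: $1: cannot read file" >&2
        return 1
    fi
    # cat with no arguments copies stdin; with "$@" it copies the named file.
    cat "$@" | tr 'a-z' 'A-Z'
}

# Normal use: input arrives on stdin, the result leaves on stdout.
from_pipe=$(echo "go navy" | shout)
echo "stdout via pipe: $from_pipe"

# Error case: the message goes to stderr (discarded here), stdout stays empty.
from_error=$(shout /no/such/file 2> /dev/null) || echo "shout failed with status $?"
echo "stdout on error: '$from_error'"
```

Because the error message never touches stdout, a command reading from shout through a pipe would see only real output, or nothing at all.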

3.1 Pipes and stdin, stdout, and stderr

Given the description of the standard file descriptors, we can better understand our pipelines with respect to the standard file descriptors.

Head writes to stdout--.   .---the stdout of head is the stdin of cat
                       |   |
                       v   v
    head -3 BAD_FILENAME | cat BeatArmy.txt - GoNavy.txt
                        \_/
                         |
        A pipe just connects the stdout of
        one command to the stdin of another

The pipe (|) is shell syntax that connects the standard output of one program to the standard input of another program, thus piping the output to input.

The fact that input is connected to output in a pipeline actually necessitates stderr: if an error were to occur along the pipeline, you would not want that error to propagate as input to the next program in the pipeline, especially when the pipeline can proceed despite the error. There needs to be a mechanism to report the error to the terminal outside the pipeline, and that mechanism is standard error.

As an example, consider the case where head is provided a bad file name.

#> head -3 BAD_FILENAME| cat BeatArmy.txt - GoNavy.txt
head: BAD_FILENAME: No such file or directory   <--- Written to stderr not piped to cat
Go Navy!
Beat Army!

Here, head encounters an error because BAD_FILENAME doesn't exist, so head prints an error message to stderr and does not write anything to stdout. Thus, cat only prints the contents of the two files to stdout. If there were no stderr, then head could only report the error to stdout, and it would interfere with the pipeline; the message

head: BAD_FILENAME: No such file or directory

is not part of the first 3 lines of any file.
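The separation can be measured directly. In the sketch below, wc counts what actually travels through the pipe, while the error message is saved to a file using the 2> redirect that the next section describes. The file name err.txt is an arbitrary choice for this example.

```shell
tmp=$(mktemp -d)

# head fails on the missing file; its error message is captured in err.txt,
# while whatever reaches the pipe is counted by wc -c.
piped_bytes=$(head -3 "$tmp/BAD_FILENAME" 2> "$tmp/err.txt" | wc -c)
err_lines=$(wc -l < "$tmp/err.txt")

echo "bytes through the pipe:" $piped_bytes
echo "lines written to stderr:" $err_lines

rm -rf "$tmp"
```

Nothing flows through the pipe, yet the error message still exists: it simply took the other stream.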

3.2 Redirecting stdin, stdout, and stderr

In addition to piping the standard file streams, you can also redirect them to a file on the filesystem. The redirect symbols are > and <. Consider a dummy command below:

cmd < input_file > output_file 2> error_file

This would mean that cmd (a stand-in for a well-formed Unix command) will read input from the file input_file, all output that would normally go to stdout is now written to output_file, and any error messages will be written to error_file. Note that the 2 and the > together (2>) indicate a redirect of file descriptor 2, which maps to stderr (see above).
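As a concrete stand-in for cmd, the sketch below uses cat with two inputs: stdin (the - argument) and a file that deliberately does not exist, so that a single command produces both normal output and an error. The directory and the file named missing are hypothetical.

```shell
tmp=$(mktemp -d)
printf 'line from stdin\n' > "$tmp/input_file"

# cat copies stdin (the - argument) and then tries a file that does not exist.
# stdin comes from input_file, stdout goes to output_file, and the complaint
# about the missing file goes to error_file. The || true only keeps the
# expected failure from stopping a script that exits on errors.
cat - "$tmp/missing" < "$tmp/input_file" > "$tmp/output_file" 2> "$tmp/error_file" || true

out=$(cat "$tmp/output_file")
err_lines=$(wc -l < "$tmp/error_file")
echo "output_file: $out"
echo "error_file lines:" $err_lines

rm -rf "$tmp"
```

Nothing appears on the terminal from cat itself; all three streams were pointed at files.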

You can also use redirects interspersed in a pipeline like below.

cmd < input_file | cmd 2> error_file | cmd > output_file

However, you cannot usefully combine a redirect and a pipe for the same standard stream, like so:

cat input_file > output_file | head

This command will result in nothing being printed to the screen via head, with all output going to output_file instead. This is because the > and < redirects always take precedence over a pipe, and the last > or < in a sequence takes the most precedence. For example:

cat input_file > out1 > out2 | head

will write the contents of the input file to the out2 file and not to out1.
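Both precedence rules can be checked on a scratch file. This sketch assumes bash or a POSIX sh; some shells (zsh with its default options, for example) treat multiple redirects differently. All file names are created only for the example.

```shell
tmp=$(mktemp -d)
printf 'hello\n' > "$tmp/input_file"

# The > redirect claims cat's stdout, so head receives no input.
seen_by_head=$(cat "$tmp/input_file" > "$tmp/output_file" | head)

# With two > redirects, the last one receives the data.
# Both files are created, but out1 stays empty.
cat "$tmp/input_file" > "$tmp/out1" > "$tmp/out2"

out1_bytes=$(wc -c < "$tmp/out1")
out2_bytes=$(wc -c < "$tmp/out2")
echo "head saw: '$seen_by_head'"
echo "out1 bytes:" $out1_bytes
echo "out2 bytes:" $out2_bytes

rm -rf "$tmp"
```

Note that out1 is still created and truncated even though it receives no data, which matters if out1 already held something you wanted to keep.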

Output redirects will automatically truncate the file being redirected to. That is, the redirect essentially erases the file and creates a new one. There are situations where, instead, you want to append to the end of the file, such as accumulating log files. You can do such output redirects with the >> symbol, a double greater-than sign. For example,

cat input_file > out
cat input_file >> out

will produce two copies of the input file concatenated together in the output file, out.
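The difference between truncating and appending is easy to observe with a small log-style file. This is a sketch; the file name log and the messages are made up.

```shell
tmp=$(mktemp -d)

echo "first run"  > "$tmp/log"    # creates (or truncates) the file
echo "second run" >> "$tmp/log"   # appends a second line
after_append=$(wc -l < "$tmp/log")

echo "third run"  > "$tmp/log"    # truncates: the earlier lines are gone
after_truncate=$(cat "$tmp/log")

echo "lines after append:" $after_append
echo "contents after truncate: $after_truncate"

rm -rf "$tmp"
```

A single stray > where >> was intended is enough to wipe out an accumulated log, so it is worth double-checking which one a script uses.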

4 Reading and Writing to /dev/null and other /dev's

There are times when you are building Unix commands that you want to redirect your output or error information to nowhere … you just want it to disappear. This is a common enough need that Unix has built in files that you can redirect to and from.

Perhaps the best known is /dev/null. Note that this file exists in the /dev path, which means it is not actually a file, but rather a device or service provided by the Unix operating system. The null device's sole task in life is to discard whatever is written to it. For example, consider the following pipeline with the BAD_FILENAME from before.

#> head -3 BAD_FILENAME 2> /dev/null | cat BeatArmy.txt - GoNavy.txt
Go Navy!
Beat Army!

Now, we are redirecting the error from head to /dev/null, and thus it goes nowhere and is lost. If you try to read from /dev/null, you get nothing, since the null device makes things disappear. The command below behaves like touch on a file that does not yet exist, since head reads nothing and then writes nothing to file, creating an empty file.

head /dev/null > file

You may think that this is a completely useless tool, but there are plenty of times when you need something to disappear (such as input or output or your ic221 homework), and that is when you need /dev/null.
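Two common uses can be sketched briefly: reading from /dev/null to supply empty input, and writing to it when only a command's exit status matters. The grep test here is just one illustrative case of the second pattern.

```shell
# Reading from /dev/null yields end-of-file immediately: zero bytes.
bytes_read=$(wc -c < /dev/null)
echo "bytes read from /dev/null:" $bytes_read

# Discard grep's output and keep only its exit status.
if echo "Go Navy!" | grep 'Navy' > /dev/null; then
    found=yes
else
    found=no
fi
echo "pattern found: $found"
```

In the second case the matching line is thrown away, but the shell can still branch on whether grep found a match.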

4.1 Other useful redirect dev's

Unix also provides a number of device files for getting information:

/dev/zero: Provides zero bytes. If you read from /dev/zero you only get zero bytes. For example, the following writes 20 zero bytes to a file:

head -c 20 /dev/zero > zero-20-byte-file.dat

/dev/urandom: Provides random bytes. If you read from /dev/urandom you get random bytes. For example, the following writes 20 random bytes to a file:

head -c 20 /dev/urandom > random-20-byte-file.dat
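Since neither file contains readable text, a quick way to confirm what was written is to check the sizes with wc and dump the bytes in hexadecimal with od. The sketch below writes to a temporary directory with shortened, hypothetical file names rather than the names used above.

```shell
tmp=$(mktemp -d)

head -c 20 /dev/zero    > "$tmp/zero.dat"
head -c 20 /dev/urandom > "$tmp/random.dat"

zero_size=$(wc -c < "$tmp/zero.dat")
random_size=$(wc -c < "$tmp/random.dat")
echo "zero.dat bytes:" $zero_size
echo "random.dat bytes:" $random_size

# od prints the bytes in hexadecimal, since neither file is readable text.
od -An -tx1 "$tmp/zero.dat"
od -An -tx1 "$tmp/random.dat"

rm -rf "$tmp"
```

The dump of zero.dat should show only 00 values, while the dump of random.dat will differ on every run.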

4.2 (Extra) A note on the /dev directory and the OS

The files you find in /dev are not really files, but actually devices provided by the operating system. A device generally connects to some input or output component of the OS. The three devices above (null, zero, and urandom) are special functions of the OS that provide the user with a null space (null), a consistent zero base (zero), and a source of random entropy (urandom).

If we take a closer look at the /dev directory, we see that there is actually quite a lot going on here.

alarm            hidraw0          network_throughput  ram9      tty13  tty35  tty57      ttyS2       vboxusb/
ashmem           hidraw1          null                random    tty14  tty36  tty58      ttyS20      vcs
autofs           hpet             oldmem              rfkill    tty15  tty37  tty59      ttyS21      vcs1
binder           input/           parport0            rtc@      tty16  tty38  tty6       ttyS22      vcs2
block/           kmsg             port                rtc0      tty17  tty39  tty60      ttyS23      vcs3
bsg/             kvm              ppp                 sda       tty18  tty4   tty61      ttyS24      vcs4
btrfs-control    lirc0            psaux               sda1      tty19  tty40  tty62      ttyS25      vcs5
bus/             log=             ptmx                sda2      tty2   tty41  tty63      ttyS26      vcs6
cdrom@           loop0            pts/                sg0       tty20  tty42  tty7       ttyS27      vcsa
cdrw@            loop1            ram0                sg1       tty21  tty43  tty8       ttyS28      vcsa1
char/            loop2            ram1                shm@      tty22  tty44  tty9       ttyS29      vcsa2
console          loop3            ram10               snapshot  tty23  tty45  ttyprintk  ttyS3       vcsa3
core@            loop4            ram11               snd/      tty24  tty46  ttyS0      ttyS30      vcsa4
cpu/             loop5            ram12               sr0       tty25  tty47  ttyS1      ttyS31      vcsa5
cpu_dma_latency  loop6            ram13               stderr@   tty26  tty48  ttyS10     ttyS4       vcsa6
disk/            loop7            ram14               stdin@    tty27  tty49  ttyS11     ttyS5       vga_arbiter
dri/             loop-control     ram15               stdout@   tty28  tty5   ttyS12     ttyS6       vhost-net
dvd@             lp0              ram2                tpm0      tty29  tty50  ttyS13     ttyS7       watchdog
dvdrw@           mapper/          ram3                tty       tty3   tty51  ttyS14     ttyS8       watchdog0
ecryptfs         mcelog           ram4                tty0      tty30  tty52  ttyS15     ttyS9       zero
fb0              mei              ram5                tty1      tty31  tty53  ttyS16     uinput
fd@              mem              ram6                tty10     tty32  tty54  ttyS17     urandom
full             net/             ram7                tty11     tty33  tty55  ttyS18     vboxdrv
fuse             network_latency  ram8                tty12     tty34  tty56  ttyS19     vboxnetctl

You will learn more about /dev's in your OS class, but for now you should know that this is a way to connect user space with kernel space through the file system. It is incredibly powerful and useful, beyond just sending stuff to /dev/null.

Each of these files is an interface to some part of the OS. For example, each tty entry corresponds to a terminal on the computer. The ram entries are RAM disks, block devices backed by the computer's memory. The dvd and cdrom entries are the files you read from and write to when working with the CD/DVD drive. And the entries under disk are a way to get to the disk drives.
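You can ask the shell what kind of object a /dev entry is. The sketch below uses the standard test operators -c (character device) and -f (regular file) on the three devices discussed earlier; the exact details printed by ls -l vary from system to system.

```shell
# test -c succeeds for character devices, test -f for regular files.
for path in /dev/null /dev/zero /dev/urandom; do
    if [ -c "$path" ]; then
        kind="character device"
    elif [ -f "$path" ]; then
        kind="regular file"
    else
        kind="something else"
    fi
    echo "$path: $kind"
done

# In a long listing, the first character of the mode column is c for a
# character device (a regular file shows - there instead).
ls -l /dev/null
```

On a typical Linux system all three should be reported as character devices, which is the OS telling you that reads and writes go to a driver rather than to data stored on disk.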